10.28.17 | Week 9. Blog Debrief

I don't think I'll end up using it, but to get louder samples out of the minimal hardware I have, I used toneAC for a little while, which gives about double the maximum volume output. Connor Nishijima has a volume library that plays nicely with his waveform synthesizer library for Arduino, so I'll probably use that instead. toneAC works by adjusting the waveform itself, so the output gets screwy at lower volumes because the tones start sounding closer to saw waves. The Nishijima library alternates between the tone and ultrasonic frequencies, so it sounds like a traditional change in volume.

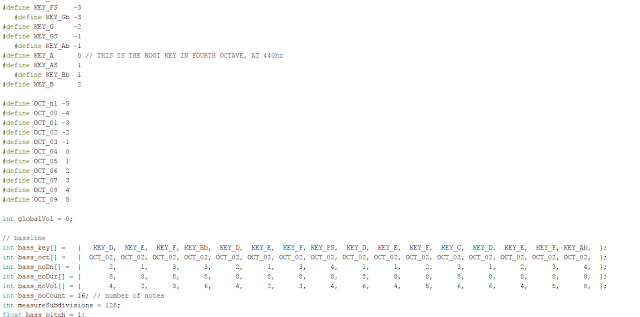

To get the ball rolling, I made good on my concept of taking a more programmatic / formulaic approach to producing frequencies based on traditional music theory, specifically the chromatic scale. Most libraries I've seen online list out each and every key (anywhere from 70 to 100 different notes, depending on the library) and hard-code a frequency for each one, but there's really no point in setting all of that up when formulas already exist to convert keys into frequencies. Since there's a set number of keys per octave (12), I used the usual method of treating A4 as a "root note" and counting up or down from there.

In short, specifying an octave and a key within that octave gives you the correct frequency at the moment the tone is produced, via the equation supplied in the link above. I go down to octave "-1" and up to the 9th octave, which aren't really a thing in actual music theory, but you could theoretically go as high or low as you want until the frequencies hit the limit for 32-bit numbers.
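
As a rough sketch of that idea, here is the standard equal-temperament formula with A4 = 440 Hz, which may or may not match the linked equation exactly. The function name `noteFrequency` and the key numbering (0 to 11, with C = 0 and A = 9) are assumptions for illustration, not the names used in the project.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper: returns the frequency in Hz for a key within an octave.
// Assumed numbering: key 0 = C, 1 = C#, ..., 9 = A, ..., 11 = B.
double noteFrequency(int key, int octave) {
    assert(key >= 0 && key < 12);

    const double a4 = 440.0;  // A4 reference pitch in Hz

    // Signed distance from A4 in semitones (negative means below A4).
    int semitonesFromA4 = (octave - 4) * 12 + (key - 9);

    // Each semitone multiplies the frequency by the twelfth root of two.
    return a4 * std::pow(2.0, semitonesFromA4 / 12.0);
}
```

With this numbering, `noteFrequency(9, 4)` is A4 itself, and moving up one octave doubles the frequency.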

As for the compositions themselves, I wrote out a simple tracker-like format to establish how they play out, which is a first for me since I normally use a WYSIWYG format for my own music production. Every note specifies a key, an octave, a "note down time", a "note duration time", and a volume (mimicking velocity in traditional MIDI). There's also a "note count" variable which allows you to cut off clips prematurely (which can be very useful for debugging or preventing desyncs if there's an error), a "measure subdivisions" variable which effectively determines the "precision" of the notes, and a pitch variable replicating PitchMod in MIDI.

Notes use a separate "down time" and "duration time" so that I don't have to functionally double the workload by defining the empty spaces between notes. Instead of establishing break times, the "down time" is the length of the note, while the "duration time" is the length of the note plus the empty space following it. For example, a note with a down time of 16 and a duration time of 32 sounds for 16 subdivisions and is followed by 16 subdivisions of silence.
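
A minimal sketch of what one note entry could look like under that scheme. The struct layout, field names, and helper functions here are hypothetical, and the real format also has the per-track settings (note count, measure subdivisions, pitch) that are left out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical note record; field names and types are assumptions.
struct Note {
    int8_t   key;       // key within the octave, 0..11
    int8_t   octave;    // -1..9
    uint16_t downTime;  // subdivisions the note actually sounds
    uint16_t duration;  // subdivisions until the next note starts
    uint8_t  volume;    // loudness, like MIDI velocity
};

// The silence after a note is implied rather than stored:
// it is whatever is left of the duration once the note is released.
uint16_t restAfter(const Note& n) {
    return n.duration > n.downTime
        ? static_cast<uint16_t>(n.duration - n.downTime)
        : 0;
}

// Total track length in subdivisions is the sum of the durations.
uint32_t totalLength(const Note* notes, size_t count) {
    assert(notes != nullptr || count == 0);
    uint32_t total = 0;
    for (size_t i = 0; i < count; ++i) {
        total += notes[i].duration;
    }
    return total;
}
```

A playback loop would then sound each note for `downTime`, stay silent for `restAfter(note)`, and move on, so rests never need their own entries.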

"Measure subdivisions" is mainly a luxury that makes transcribing from the DAW to the Arduino a bit easier: in my DAW, at least, the "snapping" feature for note lengths goes as fine as 1/64th of a measure, which means note lengths are relative to the measure rather than tied to an actual length of time. So, for example, at a measureSubdivisions value of 64 and a "note down" value of 32, the note would stay down for half a measure.
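
To turn those relative lengths into real time, something has to define how long a measure is. Here's a minimal sketch that assumes a tempo in beats per minute and 4 beats per measure. Neither of those is specified above, and `subdivisionsToMs` is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical conversion from subdivisions to milliseconds.
// Assumptions: tempo is given in BPM and every measure has 4 beats.
uint32_t subdivisionsToMs(uint32_t ticks, uint32_t measureSubdivisions, uint32_t bpm) {
    assert(measureSubdivisions > 0 && bpm > 0);

    const uint32_t beatsPerMeasure = 4;

    // One beat lasts 60000 / bpm milliseconds.
    uint32_t measureMs = beatsPerMeasure * 60000UL / bpm;

    // Multiply before dividing to keep precision with integer math.
    return ticks * measureMs / measureSubdivisions;
}
```

At 120 BPM a measure lasts 2000 ms under these assumptions, so a "note down" value of 32 with 64 subdivisions comes out to 1000 ms, which is the half measure from the example.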

Here's a video using a single sine track replicating some video game music, which ended up being something like 88 notes total: link

Doing the lead to the Prime main theme was mainly a test of how much work it takes to transcribe a (relatively) lengthy composition into my custom tracker format. The result is a melody a little over 30 seconds long. Manually transcribing the theme from an existing MIDI of it took 5-10 minutes, which is well within the scope of being able to make a bunch of bite-sized tracks for the finished product.
